ChatGPT captured the world's imagination as soon as it launched on November 30, 2022. Within about two months it had reached an estimated 100 million users, making it the fastest-growing consumer application up to that point. With ChatGPT, previously little-known terms such as Generative AI, GPT, and LLM came to the fore. In this article, we will try to explain what these terms mean in relatively simple language. Please note that this is not meant to be exhaustive.

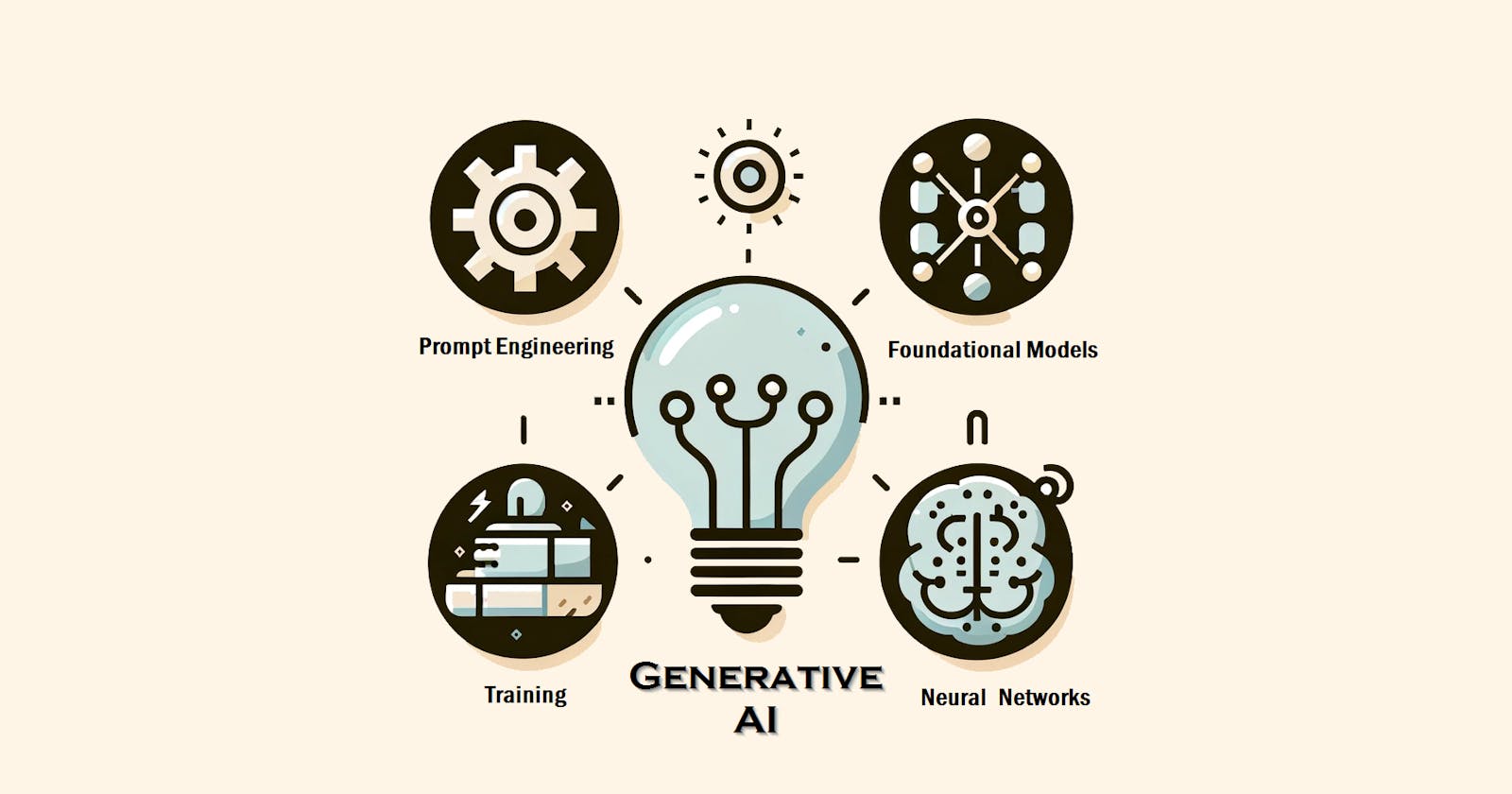

Generative AI

Generative AI refers to machine learning (ML) models that can create (hence "generative") new content such as text, images, and even music that resembles what a person might produce. These are a major advancement over traditional ML models, which until recently were limited in what they could do. Traditional models focus on specific tasks such as data analysis, forecasting, image recognition, or voice-to-text conversion, and they are typically trained on structured, labeled datasets. In contrast, GenAI models are trained on massive amounts of largely unstructured data, and they are not trained for a single narrow task.

Foundation Models

A foundation model is a large-scale model that serves as a base for various applications. These models are trained on diverse and extensive datasets, enabling them to acquire a wide range of skills and knowledge. They are foundational in the sense that they provide a broad capability base on which specialized functionality can be built. They can be adapted, or "fine-tuned", for specific tasks, which makes them an efficient starting point for many uses. Foundation models excel at recognizing complex patterns and making predictions, which can be applied in fields like natural language processing, image recognition, and beyond. GPT-4 and DALL-E from OpenAI, Claude from Anthropic, and Llama from Meta are some examples of foundation models.

Large Language Models

Large Language Models (LLMs) are a type of foundation model specifically designed for understanding and generating human language. They are trained on vast quantities of text data and can generate coherent, contextually relevant text in response to input prompts. These models can answer questions, write stories, or translate between languages. GPT-4 from OpenAI, Claude from Anthropic, and Llama from Meta are some examples of LLMs.
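To make "generating text based on input prompts" a little more concrete, here is a deliberately tiny sketch. It is not how GPT-4, Claude, or Llama work internally; it is a toy word-pair (bigram) model that only illustrates the general idea of learning from text and then extending a prompt one word at a time. The function names `train_bigram` and `generate` and the sample corpus are made up for this illustration.

```python
import random
from collections import defaultdict


def train_bigram(text):
    """Record which words were seen following each word in the training text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, prompt, max_words=8, seed=0):
    """Extend a non-empty prompt one word at a time by sampling an observed follower."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = prompt.lower().split()
    for _ in range(max_words):
        candidates = followers.get(output[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        output.append(rng.choice(candidates))
    return " ".join(output)


# Hypothetical miniature "training set"; real LLMs learn from vastly more text
corpus = "the cat sat on the mat . the dog sat on the rug ."
model = train_bigram(corpus)
print(generate(model, "the cat"))
```

A real LLM replaces the lookup table with a neural network that takes the whole prompt into account, but the loop of "predict a next piece of text, append it, repeat" is a reasonable mental model.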

GPT: Generative Pre-trained Transformers

The "GPT" in ChatGPT stands for Generative Pre-trained Transformer. GPT is a specific series of Large Language Models (LLMs) developed by OpenAI, known for its advanced text generation capabilities. All GPT models are LLMs, but not all LLMs are GPT models. ChatGPT is an interactive chat application built on top of a GPT model (at the time of writing, GPT-3.5 and GPT-4).

Prompt Engineering

Prompt Engineering is the process of composing specific instructions (i.e. prompts) for Generative AI tools so that they produce the desired output. It relies on precise, clear language that tells the tool what is wanted, for whom, and in what form. This is often an iterative process: you refine the prompt until the output matches what you expected. Good prompts can significantly improve the likelihood of getting accurate and relevant responses.
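As a rough illustration of that refinement, the sketch below assembles a vague prompt and a more specific one from the same task. The helper `build_prompt` and its parameters (`audience`, `output_format`, `constraints`) are hypothetical names chosen for this example; they are not part of any AI tool's API, and the resulting string would simply be pasted or sent to whichever tool you use.

```python
def build_prompt(task, audience=None, output_format=None, constraints=()):
    """Assemble a prompt from a task plus optional clarifying details."""
    parts = [task.strip()]
    if audience:
        parts.append(f"Audience: {audience}.")
    if output_format:
        parts.append(f"Format: {output_format}.")
    for rule in constraints:
        parts.append(f"Constraint: {rule}.")
    return "\n".join(parts)


# First attempt: leaves the tool to guess the level of detail and the format
vague = build_prompt("Explain neural networks.")

# Refined attempt: same task, with the missing context spelled out
refined = build_prompt(
    "Explain neural networks.",
    audience="high-school students with no programming background",
    output_format="three short bullet points",
    constraints=["avoid jargon", "use one everyday analogy"],
)

print(vague)
print("---")
print(refined)
```

In practice you would read the response, notice what is missing or off target, and adjust the details again.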

Training and Fine-Tuning

Training refers to the process of feeding a model large amounts of data so it can learn to perform a task. This initial training (often called pre-training) is very resource-intensive. Fine-tuning, on the other hand, continues training an already pre-trained model on a smaller, task-specific dataset to improve its performance in that specific area.
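The difference can be sketched with a model that has just one adjustable number. This is a toy under simplifying assumptions, not how real foundation models are trained: the model is `y = weight * x`, the datasets are invented, and the `train` function is a hypothetical helper that runs plain gradient descent. The point is only that fine-tuning starts from the already learned weight and needs far less data and far fewer steps.

```python
def train(weight, data, learning_rate, epochs):
    """Fit y = weight * x by gradient descent on mean squared error."""
    for _ in range(epochs):
        # Average gradient of (weight * x - y)^2 with respect to weight
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * grad
    return weight


# "Pre-training": many examples of a general relationship (y = 2x), starting from zero
general_data = [(x / 10, 2 * x / 10) for x in range(1, 101)]
pretrained = train(weight=0.0, data=general_data, learning_rate=0.01, epochs=500)

# "Fine-tuning": three task-specific examples (y = 2.5x), starting from the pre-trained weight
task_data = [(1.0, 2.5), (2.0, 5.0), (3.0, 7.5)]
fine_tuned = train(weight=pretrained, data=task_data, learning_rate=0.01, epochs=50)

print(f"pre-trained weight: {pretrained:.3f}")
print(f"fine-tuned weight:  {fine_tuned:.3f}")
```

The pre-trained weight settles near 2, and the short fine-tuning run nudges it close to 2.5 without starting over.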

Neural Networks

While the term "Neural Networks" doesn't come up as often as some of the other terms, no discussion of Generative AI is complete without it. Neural networks are at the core of Generative AI. They are algorithms loosely inspired by the human brain: layers of simple connected units ("neurons") that learn to recognize patterns and make predictions from the data they have been trained on. Neural networks have been around for decades. The growth of the internet, which made vast amounts of data available, and increasing computational power in the 21st century led to the rise of deep learning, in which networks with many layers ("deep" networks) learn from that data. This progress paved the way for Generative AI.
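For readers curious what "layers" look like in practice, here is a minimal sketch of data flowing through a two-layer network. It assumes the simplest possible setup: each neuron computes a weighted sum of its inputs and squashes it with a sigmoid function. The weights below are hand-picked, hypothetical values for illustration only; in a real network they are learned during training, and there are vastly more of them.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One dense layer: each neuron takes a weighted sum of the inputs, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the inputs through each layer in turn and return the final activations."""
    activations = inputs
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical hand-picked weights; a trained network would have learned these from data
network = [
    ([[0.5, -0.6], [0.1, 0.8]], [0.0, -0.1]),  # hidden layer: 2 inputs -> 2 neurons
    ([[1.2, -0.7]], [0.05]),                   # output layer: 2 neurons -> 1 output
]

print(forward([1.0, 0.0], network))
```

A "deep" network is the same idea with many more layers stacked between input and output.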